The smps.log can sometimes report sporadic errors indicating that it may have lost a connection to a server running a SiteMinder WebAgent:

[4280/5660][Wed Nov 05 2014 08:32:30][CServer.cpp:2283][ERROR][sm-Server-01090] Failed to receive request on session # 185 : <hostname>/<IP Address>:<Port>. Socket error 10053

If this error is occurring in the smps.log, you can check the webagent log of the server where the webagent is installed. This can be found by using the <hostname> reported in the smps.log error. The webagent log will have a correlating timestamp at or near the time of the Socket error reported in the smps.log.
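To make the correlation easier, the hostname and timestamp can be pulled out of the smps.log entry programmatically. The following is a minimal sketch, assuming the entry has exactly the layout shown above; the function name `parse_socket_error` and the sample hostname, IP address and port are hypothetical placeholders, not values from a real environment.

```python
import re
from datetime import datetime

# Pattern for the "Failed to receive request" entry shown above.
# Assumption: the bracketed fields always appear in this order.
LINE_RE = re.compile(
    r"\[\d+/\d+\]"                      # leading numeric ids, e.g. [4280/5660]
    r"\[(?P<ts>[^\]]+)\]"               # timestamp
    r"\[(?P<src>[^\]]+)\]"              # source file and line
    r"\[(?P<level>[^\]]+)\]"            # severity
    r"\[(?P<code>[^\]]+)\]\s*"          # message code
    r"Failed to receive request on session # (?P<session>\d+) : "
    r"(?P<host>[^/]+)/(?P<ip>[^:]+):(?P<port>\d+)\. "
    r"Socket error (?P<sockerr>\d+)"
)


def parse_socket_error(line):
    """Return the fields needed to find the matching webagent log, or None."""
    m = LINE_RE.search(line)
    if m is None:
        return None
    return {
        "time": datetime.strptime(m.group("ts"), "%a %b %d %Y %H:%M:%S"),
        "host": m.group("host"),
        "ip": m.group("ip"),
        "port": int(m.group("port")),
        "socket_error": int(m.group("sockerr")),
    }


# Hypothetical sample line: host, IP and port are made up for illustration.
sample = (
    "[4280/5660][Wed Nov 05 2014 08:32:30][CServer.cpp:2283][ERROR]"
    "[sm-Server-01090] Failed to receive request on session # 185 : "
    "web01.example.com/10.0.0.15:44443. Socket error 10053"
)
print(parse_socket_error(sample))
```

The returned `host` tells you which server's webagent log to open, and `time` gives the point around which to look for a matching entry.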

NOTE: You should also keep in mind that the “Date Modified” timestamp of the webagent log can sometimes be different because once the webagent is stopped and restarted, a new log is only created once a request is made for a resource under the default website within IIS7. If this is an environment where the usage is low, there could be a difference between when the error is reported in the smps.log on the policy server and when the webagent is restarted.

One way to confirm that the “Idle Time-Out” threshold is being reached in the DefaultAppPool in IIS7 is to check the Windows Event Viewer. You will also find that the System Log is reporting the following message:

Information about Event ID 5186 can be found at: http://technet.microsoft.com/en-us/library/cc735034(v=ws.10).aspx

Information about configuring Idle Time-out settings can be found at: http://technet.microsoft.com/en-us/library/cc771956(v=ws.10).aspx

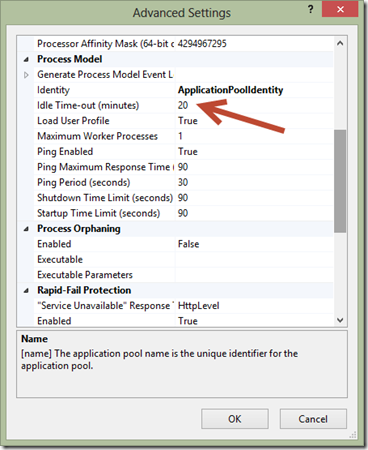

By default, IIS7 sets application pools to “time-out” after 20 minutes of inactivity. If you do not have any activity to your site within 20 minutes, the application pool will shut down, freeing up those system resources. When this happens, the webagent tied to the default application pool is also shut down. The next time a request comes into the site, IIS7 will automatically restart the application pool, serve up the requested pages and, in turn, start the webagent. It also means that the first request, the one that causes the application pool to restart, will be very slow. This is a result of the process starting, loading the required assemblies (like .NET) and finally loading the requested pages.

To extend the length of the time-out setting, simply change it from the default of 20 (minutes) to the value you choose. You can also adjust the setting to 0 (zero) which effectively disables the timeout so that the application pool will never shut down due to being idle.

To make this change, open Server Manager -> Expand the Roles -> Expand the Web Server (IIS) node. Then click on the Web Server (IIS) node -> Expand the node with your local server name and click on the Application Pools icon. You will then see a list of the application pools that are defined on your server. Select the application pool you want to change (DefaultAppPool in this case) and, in the right-hand pane, click the option for Advanced Settings. The Idle Time-out (minutes) value is listed under Process Model.

Source: http://bradkingsley.com/iis7-application-pool-idle-time-out-settings/

Restart IIS7 once the changes have been made. NOTE: When restarting IIS7, open Task Manager and make sure that the LLAWP.exe process fully terminates before starting IIS7.

After the changes have been made and IIS7 has been restarted, monitor the smps.log and the webagent logs to verify that the changes have taken effect.

———————————————————————————————————————————————————-

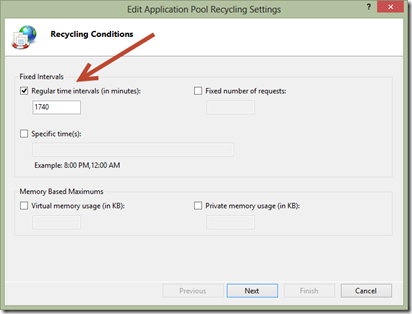

Why is the IIS default app pool recycle set to 1740 minutes?

Microsoft IIS Server has what appears to be an odd default for the application pool recycle time. It defaults to 1740 minutes, which is exactly 29 hours. I’ve always been a bit curious where that default came from. If you’re like me, you may have wondered too.

Wonder no longer! While at the MVP Summit this year in Bellevue WA I had the privilege again of talking with the IIS team. Wade Hilmo was there too. Somehow in the conversation a discussion about IIS default settings came up, which included the odd 1740 minutes for the app pool recycle interval. Wade told the story of how the setting came into being, and he granted me permission to share.

As you can imagine, many decisions for the large set of products produced by Microsoft come about after a lot of deliberation and research. Others have a geeky and fun origin. This is one of the latter.

The 1740 story

Back when IIS 6 was being developed—which is the version that introduced application pools—a default needed to be set for the Regular Time Interval when application pools are automatically recycled.

Wade suggested 29 hours for the simple reason that it’s the smallest prime number over 24. He wanted a staggered and non-repeating pattern that doesn’t occur more frequently than once per day. In Wade’s words: “you don’t get a resonate pattern”. The default has been 1740 minutes (29 hours) ever since!
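The staggered pattern is easy to see with a little modular arithmetic. The sketch below is only an illustration, assuming the first recycle happens at hour 0 and nothing else restarts the pool in between; the function names are made up for this example.

```python
def recycle_hours(interval_hours, count):
    """Hour of day (0-23) at which each successive recycle falls."""
    return [(i * interval_hours) % 24 for i in range(count)]


def distinct_hours(interval_hours):
    """How many different hours of the day get hit over 24 recycles."""
    return len(set(recycle_hours(interval_hours, 24)))


print(recycle_hours(29, 6))   # [0, 5, 10, 15, 20, 1]
print(distinct_hours(29))     # 24
print(distinct_hours(24))     # 1
```

With a 29 hour interval each recycle lands five hours later in the day than the previous one, and every hour of the day is visited once before the pattern repeats. A 24 hour interval would hit the same hour every day.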

That’s a fun little tidbit on the origin of the 1740. How about in your environment though? What is a good default?

Practical guidelines

First off, I think 29 hours is a good default. For a situation where you don’t know the environment, which is the case for a default setting, having a non-resonate pattern greater than one day is a good idea.

However, since you likely know your environment, it’s best to change this. I recommend setting it to a fixed time like 4:00am if you’re on the East coast of the US, 1:00am on the West coast, or whatever seems to make sense for your audience when you have the least amount of traffic. Setting it to a fixed time each day during low traffic times will minimize the impact and also allow you to troubleshoot more easily if you run into any issues. If you have multiple application pools it may be wise to stagger them so that you don’t overload the server with a lot of simultaneous recycles.

Note that IIS overlaps the app pool when recycling so there usually isn’t any downtime during a recycle. However, in-memory information (session state, etc) is lost. See this video if you want to learn more about IIS overlapping app pools.

You may ask whether a fixed recycle is even needed. A daily recycle is just a band-aid to freshen IIS in case there is a slight memory leak or anything else that slowly creeps into the worker process. In theory you don’t need a daily recycle unless you have a known problem. I used to recommend that you turn it off completely if you don’t need it. However, I’m leaning more today towards setting it to recycle once per day at an off-peak time as a proactive measure.

My reason is that, first, your site should be able to survive a recycle without too much impact, so recycling daily shouldn’t be a concern. Secondly, I’ve found that even well behaving app pools can eventually have something sneak in over time that impacts the app pool. I’ve seen issues from traffic patterns that cause excessive caching or something odd in the application, and I’ve seen the very rare IIS bug (rare indeed!) that isn’t a problem if recycled daily. Is it a band-aid? Possibly, but if a daily recycle keeps a non-critical issue from bubbling to the top then I believe that it’s a good proactive measure to save a lot of troubleshooting effort on something that probably isn’t important to troubleshoot. However, if you think you have a real issue that is being suppressed by recycling then, by all means, turn off the auto-recycling so that you can track down and resolve your issue. There’s no black and white answer. Only you can make the best decision for your environment.

Idle Time-out

While on the topic of app pool defaults, there is one more that you should change with every new server deployment. The Idle Time-out should be set to 0 unless you are doing bulk hosting where you want to keep the memory footprint per site as low as possible.

If you have just a few sites on your server and you want them to always load fast then set this to zero. Otherwise, when you have 20 minutes without any traffic then the app pool will terminate so that it can start up again on the next visit. The problem is that the first visit to an app pool needs to create a new w3wp.exe worker process which is slow because the app pool needs to be created, ASP.NET or another framework needs to be loaded, and then your application needs to be loaded. That can take a few seconds. Therefore I set that to 0 every chance I have, unless it’s for a server that hosts a lot of sites that don’t always need to be running.
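To get a feel for how often visitors would hit a slow first request, you can count the gaps in a traffic log that exceed the idle time-out. This is a simplified model, not how IIS itself tracks idleness: it assumes the pool is stopped before the first request, treats a gap equal to the time-out as a shutdown, and ignores recycles. The function name and the sample traffic are hypothetical.

```python
def cold_starts(request_minutes, idle_timeout_minutes):
    """Count requests that arrive while the worker process is not running.

    request_minutes: arrival times in minutes.
    idle_timeout_minutes: 0 means the idle time-out is disabled.
    """
    starts = 0
    last = None
    for t in sorted(request_minutes):
        if last is None:
            starts += 1      # the very first request has to start the process
        elif idle_timeout_minutes > 0 and t - last >= idle_timeout_minutes:
            starts += 1      # pool was shut down during the quiet period
        last = t
    return starts


traffic = [0, 5, 30, 45, 120]        # hypothetical low-traffic site
print(cold_starts(traffic, 20))      # 3
print(cold_starts(traffic, 0))       # 1
```

With the default of 20 minutes, three of the five requests pay the start-up cost; with the time-out set to 0, only the first one does.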

There are other settings that can be reviewed for each environment but the two aforementioned settings are the two that should be changed almost every time.

Hopefully you enjoyed knowing about the 29 hour default as much as I did, even if just for fun. Happy IISing.

Credits:

http://weblogs.asp.net/owscott/why-is-the-iis-default-app-pool-recycle-set-to-1740-minutes

Contention, poor performance, and deadlocks when you make calls to Web services from an ASP.NET application

Symptoms

When you make calls to Web services from a Microsoft ASP.NET application, you may experience contention, poor performance, and deadlocks. Clients may report that requests stop responding (or “hang”) or take a very long time to execute. If a deadlock is suspected, the worker process may be recycled. You may receive the following messages in the application event log.

- If you are using Internet Information Services (IIS) 5.0, you receive the following messages in the Application log:

    Event Type: Error
    Event Source: ASP.NET 1.0.3705.0
    Event Category: None
    Event ID: 1003
    Date: 5/4/2003
    Time: 6:18:23 PM
    User: N/A
    Computer: <ComputerName>
    Description: aspnet_wp.exe (PID: <xxx>) was recycled because it was suspected to be in a deadlocked state. It did not send any responses for pending requests in the last 180 seconds.

- If you are using IIS 6.0, you receive the following messages in the Application log:

    Event Type: Warning
    Event Source: W3SVC-WP
    Event Category: None
    Event ID: 2262
    Date: 5/4/2003
    Time: 1:02:33 PM
    User: N/A
    Computer: <ComputerName>
    Description: ISAPI 'C:\Windows\Microsoft.net\Framework\v.1.1.4322\aspnet_isapi.dll' reported itself as unhealthy for the following reason: 'Deadlock detected'.

- If you are using IIS 6.0, you receive the following messages in the System log:

    Event Type: Warning
    Event Source: W3SVC
    Event Category: None
    Event ID: 1013
    Date: 5/4/2003
    Time: 1:03:47 PM
    User: N/A
    Computer: <ComputerName>
    Description: A process serving application pool 'DefaultAppPool' exceeded time limits during shut down. The process id was '<xxxx>'.

You may also receive the following exception error message when you make a call to the HttpWebRequest.GetResponse method:

You may also receive the following exception error message in the browser:

Note This article also applies to applications that make HttpWebRequest requests directly.

Cause

This problem might occur because ASP.NET limits the number of worker threads and completion port threads that a call can use to execute requests.

Typically, a call to a Web service uses one worker thread to execute the code that sends the request and one completion port thread to receive the callback from the Web service. However, if the request is redirected or requires authentication, the call may use as many as two worker threads and two completion port threads. Therefore, you can exhaust the managed ThreadPool when multiple Web service calls occur at the same time.

For example, suppose that the ThreadPool is limited to 10 worker threads and that all 10 worker threads are currently executing code that is waiting for a callback to execute. The callback can never execute, because any work items that are queued to the ThreadPool are blocked until a thread becomes available.

Another potential source of contention is the maxconnection parameter that the System.Net namespace uses to limit the number of connections. Generally, this limit works as expected. However, if many applications try to make many requests to a single IP address at the same time, threads may have to wait for an available connection.

Resolution

To resolve these problems, you can tune the following parameters in the Machine.config file to best fit your situation:

- maxWorkerThreads

- minWorkerThreads

- maxIoThreads

- minFreeThreads

- minLocalRequestFreeThreads

- maxconnection

- executionTimeout

To successfully resolve these problems, take the following actions:

- Limit the number of ASP.NET requests that can execute at the same time to approximately 12 per CPU.

- Permit Web service callbacks to freely use threads in the ThreadPool.

- Select an appropriate value for the maxconnection parameter. Base your selection on the number of IP addresses and AppDomains that are used.

Note The recommendation to limit the number of ASP.NET requests to 12 per CPU is a little arbitrary. However, this limit has proved to work well for most applications.

maxWorkerThreads and maxIoThreads

ASP.NET uses the following two configuration settings to limit the maximum number of worker threads and completion threads that are used:

    <processModel maxWorkerThreads="20" maxIoThreads="20">

The maxWorkerThreads parameter and the maxIoThreads parameter are implicitly multiplied by the number of CPUs. For example, if you have two processors, the maximum number of worker threads is the following:

    2*maxWorkerThreads

minFreeThreads and minLocalRequestFreeThreads

ASP.NET also contains the following configuration settings that determine how many worker threads and completion port threads must be available to start a remote request or a local request:

    <httpRuntime minFreeThreads="8" minLocalRequestFreeThreads="8">

If there are not sufficient threads available, the request is queued until sufficient threads are free to make the request. Therefore, ASP.NET will not execute more than the following number of requests at the same time:

    (maxWorkerThreads*number of CPUs)-minFreeThreads

Note The minFreeThreads parameter and the minLocalRequestFreeThreads parameter are not implicitly multiplied by the number of CPUs.
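As a quick check of the arithmetic, the sketch below plugs in the values from the two configuration snippets above for a two-processor server. The formula for concurrent requests is a reading of the text (threads available minus threads that must stay free), and the function names are hypothetical.

```python
def max_worker_threads(max_worker_threads_per_cpu, cpus):
    """maxWorkerThreads is implicitly multiplied by the number of CPUs."""
    return max_worker_threads_per_cpu * cpus


def max_concurrent_requests(max_worker_threads_per_cpu, cpus, min_free_threads):
    """minFreeThreads is NOT multiplied by the number of CPUs."""
    return max_worker_threads(max_worker_threads_per_cpu, cpus) - min_free_threads


print(max_worker_threads(20, 2))            # 40
print(max_concurrent_requests(20, 2, 8))    # 32
```

With maxWorkerThreads="20" and minFreeThreads="8" on two processors, up to 40 worker threads exist and at most 32 requests execute at the same time.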

minWorkerThreads

As of ASP.NET 1.0 Service Pack 3 and ASP.NET 1.1, ASP.NET also contains the following configuration setting that determines how many worker threads may be made available immediately to service a remote request.

    <processModel minWorkerThreads="1">

Threads that are controlled by this setting can be created at a much faster rate than worker threads that are created from the CLR’s default “thread-tuning” capabilities. This setting enables ASP.NET to service requests that may be suddenly filling the ASP.NET request queue due to a slow-down on a back end server, a sudden burst of requests from the client end, or something similar that would cause a sudden rise in the number of requests in the queue. The default value for the minWorkerThreads parameter is 1. We recommend that you set the value for the minWorkerThreads parameter to the following value.

    minWorkerThreads = maxWorkerThreads / 2

By default, the minWorkerThreads parameter is not present in either the Web.config file or the Machine.config file. This setting is implicitly multiplied by the number of CPUs.

maxconnection

The maxconnection parameter determines how many connections can be made to a specific IP address. The parameter appears as follows:

    <connectionManagement>
        <add address="*" maxconnection="2"/>
        <add address="http://65.53.32.230" maxconnection="12"/>
    </connectionManagement>

If the application’s code references the application by hostname instead of IP address, the parameter should appear as follows:

    <connectionManagement>
        <add address="*" maxconnection="2"/>
        <add address="http://hostname" maxconnection="12"/>
    </connectionManagement>

Finally, if the application is hosted on a port other than 80, the parameter has to include the non-standard port in the URI, similar to the following:

    <connectionManagement>
        <add address="*" maxconnection="2"/>
        <add address="http://hostname:8080" maxconnection="12"/>
    </connectionManagement>

The settings for the parameters that are discussed earlier in this article are all at the process level. However, the maxconnection parameter setting applies to the AppDomain level. By default, because this setting applies to the AppDomain level, you can create a maximum of two connections to a specific IP address from each AppDomain in your process.

executionTimeout

ASP.NET uses the following configuration setting to limit the request execution time:

    <httpRuntime executionTimeout="90"/>

You can also set this limit by using the Server.ScriptTimeout property.

Note If you increase the value of the executionTimeout parameter, you may also have to modify the processModel responseDeadlockInterval parameter setting.

Recommendations

The settings that are recommended in this section may not work for all applications. However, the following additional information may help you to make the appropriate adjustments.

If you are making one Web service call to a single IP address from each ASPX page, Microsoft recommends that you use the following configuration settings:

- Set the values of the maxWorkerThreads parameter and the maxIoThreads parameter to 100.

- Set the value of the maxconnection parameter to 12*N (where N is the number of CPUs that you have).

- Set the value of the minFreeThreads parameter to 88*N and the value of the minLocalRequestFreeThreads parameter to 76*N.

- Set the value of minWorkerThreads to 50. Remember, minWorkerThreads is not in the configuration file by default. You must add it.

Some of these recommendations involve a simple formula that involves the number of CPUs on a server. The variable that represents the number of CPUs in the formulas is N. For these settings, if you have hyperthreading enabled, you must use the number of logical CPUs instead of the number of physical CPUs. For example, if you have a four-processor server with hyperthreading enabled, then the value of N in the formulas will be 8 instead of 4.

Note When you use this configuration, you can execute a maximum of 12 ASP.NET requests per CPU at the same time because 100-88=12. Therefore, at least 88*N worker threads and 88*N completion port threads are available for other uses (such as for the Web service callbacks).
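The recommended values can be derived mechanically from the number of logical CPUs. The helper below is a small sketch of the formulas listed above; the function name `recommended_settings` is made up, and the values are the starting points from this section rather than guaranteed-correct settings for every application.

```python
def recommended_settings(logical_cpus):
    """Apply the per-CPU formulas from this section (N = logical CPUs)."""
    n = logical_cpus
    return {
        "maxWorkerThreads": 100,              # multiplied by N implicitly by ASP.NET
        "maxIoThreads": 100,                  # multiplied by N implicitly by ASP.NET
        "minWorkerThreads": 50,
        "maxconnection": 12 * n,
        "minFreeThreads": 88 * n,
        "minLocalRequestFreeThreads": 76 * n,
    }


# Four processors with hyperthreading enabled means N = 8.
settings = recommended_settings(8)
print(settings["minFreeThreads"])               # 704
print(settings["minLocalRequestFreeThreads"])   # 608
print(settings["maxconnection"])                # 96

# Requests that can execute at once: 100*N threads minus the reserved 88*N.
print(settings["maxWorkerThreads"] * 8 - settings["minFreeThreads"])   # 96
```

The last figure, 96 concurrent requests on 8 logical CPUs, is the 12 per CPU limit described in the note above.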

For example, you have a server with four processors and hyperthreading enabled. Based on these formulas, you would use the following values for the configuration settings that are mentioned in this article.

    <system.web>
        <processModel maxWorkerThreads="100" maxIoThreads="100" minWorkerThreads="50"/>
        <httpRuntime minFreeThreads="704" minLocalRequestFreeThreads="608"/>
    </system.web>
    <system.net>
        <connectionManagement>
            <add address="[ProvideIPHere]" maxconnection="96"/>
        </connectionManagement>
    </system.net>

Also, when you use this configuration, 12 connections are available per CPU per IP address for each AppDomain. Therefore, in the following scenario, very little contention occurs when requests are waiting for connections, and the ThreadPool is not exhausted:

- The web server hosts only one application (AppDomain).

- Each request for an ASPX page makes one Web service request.

- All requests are to the same IP address.

However, when you use this configuration, scenarios that involve one of the following will probably use too many connections:

- Requests are to multiple IP addresses.

- Requests are redirected (302 status code).

- Requests require authentication.

- Requests are made from multiple AppDomains.

In these scenarios, it is a good idea to use a lower value for the maxconnection parameter and higher values for the minFreeThreads parameter and the minLocalRequestFreeThreads parameter.

Credits:

https://support.microsoft.com/en-us/kb/821268

.NET and ADO.NET Data Service Performance Tips for Windows Azure Tables

We have collected the common issues that users have come across while using Windows Azure Table and posted some solutions. Some of these are .NET related or ADO.NET Data Services (aka Astoria) related. If you have alternate solutions, please let us know. If you feel we have missed something important, please let us know and we would like to cover them. We hope that the list helps 🙂

1> Default .NET HTTP connections is set to 2

This is a notorious one that has affected many developers. By default, .NET allows only 2 concurrent HTTP connections to a given host. This manifests itself as “underlying connection was closed…” when the number of concurrent requests is greater than 2. The default can be increased by setting the following in the application configuration file or in code.

Config file:

    <system.net>
        <connectionManagement>
            <add address="*" maxconnection="48" />
        </connectionManagement>
    </system.net>

In code:

    ServicePointManager.DefaultConnectionLimit = 48;

The exact number depends on your application. http://support.microsoft.com/kb/821268 has good information on how to set this for server side applications.

One can also set it for a particular uri by specifying the URI in place of “*”. If you are setting it in code, you could use the ServicePoint class rather than the ServicePointManager class i.e.:

    ServicePoint myServicePoint = ServicePointManager.FindServicePoint(myServiceUri);
    myServicePoint.ConnectionLimit = 48;

2> Turn off 100-continue (saves 1 roundtrip)

What is 100-continue? When a client sends a POST/PUT request, it can delay sending the payload by sending an “Expect: 100-continue” header.

1. The server will use the URI plus headers to ensure that the call can be made.

2. The server would then send back a response with status code 100 (Continue) to the client.

3. The client would send the rest of the payload.

This allows the client to be notified of most errors without incurring the cost of sending that entire payload. However, once the entire payload is received on the server end, other errors may still occur. When using .NET library, HttpWebRequest by default sends “Expect: 100-Continue” for all PUT/POST requests (even though MSDN suggests that it does so only for POSTS).

In Windows Azure Tables/Blobs/Queue, some of the failures that can be tested just by receiving the headers and URI are authentication, unsupported verbs, missing headers, etc. If Windows Azure clients have tested the client well enough to ensure that it is not sending any bad requests, clients could turn off 100-continue so that the entire request is sent in one roundtrip. This is especially true when clients send small payloads as in the table or queue service. This setting can be turned off in code or via a configuration setting.

Code:

    ServicePointManager.Expect100Continue = false; // or on service point if only a particular service needs to be disabled.

Config file:

    <system.net>
        <settings>
            <servicePointManager expect100Continue="false" />
        </settings>
    </system.net>

Before turning 100-continue off, we recommend that you profile your application examining the effects with and without it.

3> To improve performance of ADO.NET Data Service deserialization

When you execute a query using ADO.NET Data Services, there are two important names: the name of the CLR class for the entity, and the name of the table in Windows Azure Table. We have noticed that when these names are different, there is a fixed overhead of approximately 8-15ms for deserializing each entity received in a query.

There are two workarounds until this is fixed in Astoria:

1> Rename your table to be the same as the class name.

So if you have a Customer entity class, use “Customer” as the table name instead of “Customers”.

    from customer in context.CreateQuery<Customer>("Customer")
    where customer.PartitionKey == "Microsoft" select customer;

2> Use ResolveType on the DataServiceContext

    public void Query(DataServiceContext context)
    {
        // set the ResolveType to a method that will return the appropriate type to create
        context.ResolveType = this.ResolveEntityType;
        …
    }

    public Type ResolveEntityType(string name)
    {
        // if the context handles just one type, you can return it without checking the
        // value of "name". Otherwise, check for the name and return the appropriate
        // type (maybe a map of Dictionary<string, Type> will be useful)
        Type type = typeof(Customer);
        return type;
    }

4> Turn entity tracking off for query results that are not going to be modified

DataServiceContext has a property MergeOption which can be set to AppendOnly, OverwriteChanges, PreserveChanges and NoTracking. The default is AppendOnly. All options except NoTracking lead to the context tracking the entities. Tracking is mandatory for updates/inserts/deletes. However, not all applications need to modify the entities that are returned from a query, so there really is no need to have change tracking on. The benefit is that Astoria need not do the extra work to track these entities. Turning off entity tracking allows the garbage collector to free up these objects even if the same DataContext is used for other queries. Entity tracking can be turned off by using:

    context.MergeOption = MergeOption.NoTracking;

However, when using a context for updates/inserts/deletes, tracking has to be turned on, and one would use PreserveChanges to ensure that etags are always updated for the entities.

5> All about unconditional updates/deletes

ETags can be viewed as a version for entities. These can be used for concurrency checks using the If-Match header during updates/deletes. Astoria maintains this etag, which is sent with every entity entry in the payload. To get into more details, Astoria tracks entities in the context via context.Entities, which is a collection of EntityDescriptors. EntityDescriptor has an “Etag” property that Astoria maintains. On every update/delete the ETag is sent to the server. Astoria by default sends the mandatory “If-Match” header with this etag value.

On the server side, Windows Azure Table ensures that the etag sent in the If-Match header matches our Timestamp property in the data store. If it matches, the server goes ahead and performs the update/delete; otherwise the server returns a status code of 412 (Precondition Failed), indicating that someone else may have modified the entity being updated/deleted. If a client sends “*” in the “If-Match” header, it tells the server that an unconditional update/delete needs to be performed, i.e. go ahead and perform the requested operation irrespective of whether someone has changed the entity in the store. A client can send unconditional updates/deletes using the following code:

    context.AttachTo("TableName", entity, "*");
    context.UpdateObject(entity);

However, if this entity is already being tracked, the client will be required to detach the entity before attaching it:

    context.Detach(entity);
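The If-Match behavior described in this tip can be modeled with a few lines of code. The following is a toy in-memory store, not the Windows Azure Table service or the Astoria client: the class names and the version-counter etags are invented for illustration, and the real service derives the etag from the Timestamp property as described above.

```python
class PreconditionFailed(Exception):
    """Stands in for an HTTP 412 (Precondition Failed) response."""


class TableStore:
    """Toy store that checks an If-Match value before each update."""

    def __init__(self):
        self._rows = {}       # key -> (etag, value)
        self._version = 0

    def _next_etag(self):
        self._version += 1
        return f"v{self._version}"

    def insert(self, key, value):
        etag = self._next_etag()
        self._rows[key] = (etag, value)
        return etag

    def update(self, key, value, if_match):
        current_etag, _ = self._rows[key]
        # "*" means unconditional; otherwise the etag must still be current.
        if if_match != "*" and if_match != current_etag:
            raise PreconditionFailed(412)
        etag = self._next_etag()
        self._rows[key] = (etag, value)
        return etag


store = TableStore()
first_etag = store.insert("row1", "a")
store.update("row1", "b", if_match=first_etag)       # succeeds, etag changes

try:
    store.update("row1", "c", if_match=first_etag)   # stale etag
except PreconditionFailed:
    print("412: entity was modified by someone else")

store.update("row1", "d", if_match="*")              # unconditional update succeeds
```

The second update fails because the entity changed after `first_etag` was issued, while the final update with "*" goes through regardless of the stored version.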

Added on April 28th 2009

6> Turning off Nagle may help Inserts/Updates

We have seen that turning Nagle off can significantly reduce latency for inserts and updates in tables. However, turning Nagle off is known to adversely affect throughput, so test it with your application to see whether it makes a difference.

This can be turned off either in the configuration file or in code as below.

Code:

    ServicePointManager.UseNagleAlgorithm = false;

Config file:

    <system.net>
        <settings>
            <servicePointManager expect100Continue="false" useNagleAlgorithm="false"/>
        </settings>
    </system.net>
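At the socket level, turning Nagle off generally corresponds to setting the TCP_NODELAY option, so that small writes are sent immediately instead of being coalesced. The sketch below shows that option on a plain Python socket as an illustration of the concept; it does not use the .NET classes discussed here, and the helper name `make_socket` is hypothetical.

```python
import socket


def make_socket(use_nagle):
    """Create a TCP socket with the Nagle algorithm enabled or disabled."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # TCP_NODELAY = 1 disables Nagle; 0 leaves it enabled.
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 0 if use_nagle else 1)
    return sock


sock = make_socket(use_nagle=False)
# A non-zero value means Nagle is off for this socket.
print(sock.getsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY) != 0)   # True
sock.close()
```

Small request payloads, such as single entity inserts, are the case where the coalescing delay is most noticeable, which is why this setting tends to matter for table operations.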